Chris is missing the point of both technologies. And I'm sure not a brat Millennial.

Marzipan (candy frosting) is a legacy porting technology: Existing iOS apps can cost more to port to AppKit than they're worth, but may be worth something as a cheap Marzipan port. Nobody ports their iOS apps to tvOS or watchOS because it's not profitable, and everyone (in the first world with money) has an iPhone already.

I loved my UNIX® workstation Macs after suffering with Linux for a decade+, but Timmy Cook's Apple abandoned the Mac after Steve's death and Scott Forstall's firing. Anyone making new native Mac apps is in an abusive relationship: Apple does not love you, and does not care about the Mac.

I'd rather eat broken glass than run Linux again, and I have never and will never be a Windows weenie, but I'm not relying on Apple to support desktop developers ever again.

Apple's Mac apps have generally been shit for years now, because they won't spend the resources to develop & support their own stuff. iTunes is a bloated pile of crap, half-broken because it has to run on Windows, too; you don't like hybrid web apps? Everything except your library is web or XML rendering. Pages and Numbers were fast, minimally useful apps that got rewritten based on the iOS versions, and are just about useful for a memo or a chart, but not real work. Mail's a clusterfuck and not half as useful as when it supported more scripting and addons.

The new Mac commercials, first in years, show the broken-keyboard laptops and models they no longer make, nobody coding Mac apps, no desktop Macs. Where's that shiny "new" iMac Pro from last winter? Isn't that what a real musician would use? A near-blind photographer squints at a tiny Mac laptop instead of a giant 27" retina display?

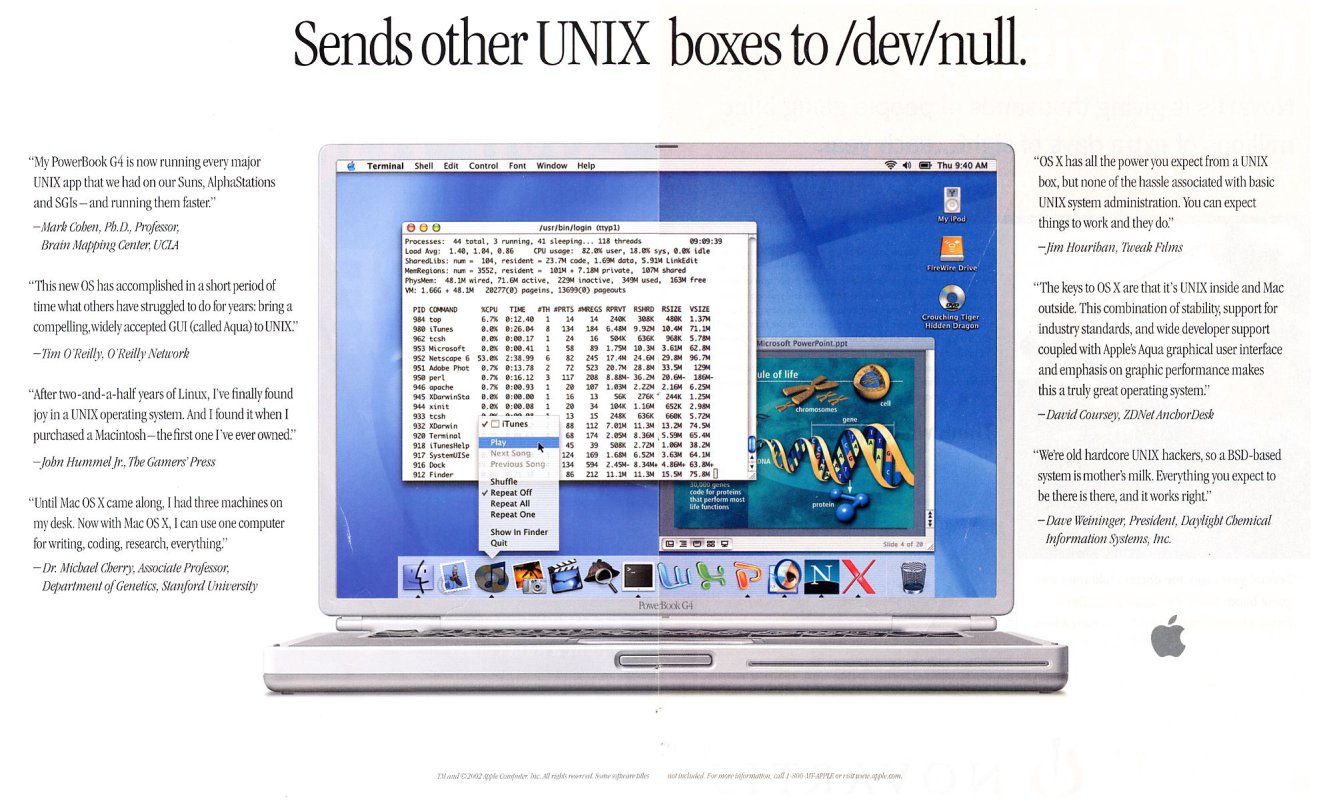

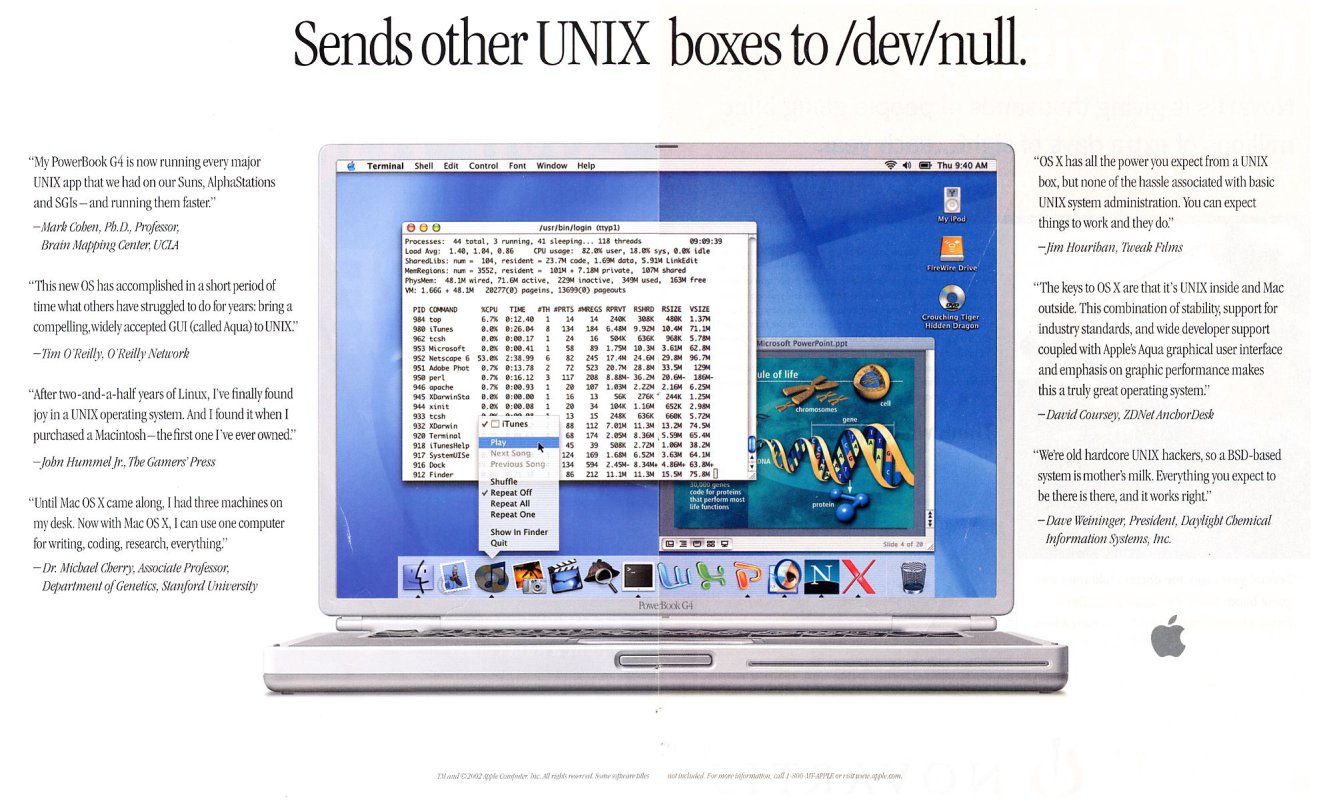

This is how technical, developer-oriented Apple ads were in 2002:

Electron and Node are the future (along with Elixir, Go, Rust, maybe others?). It's 100x faster and more fun (attach the FunMeter™ somewhere fun) to code in than Swift, you can use any good editor instead of fucking Xcode, do layout with HTML/CSS instead of the rotting corpse of Interface Builder trapped inside Xcode. And it's cross-platform; 95% of the users (even in the first world with money) don't run Mac, because Apple never updates the Macs, they failed utterly to follow-through with Macs behind iPhones. There's 20x bigger market potential.

The future is certainly not banging your head against the Swift and Xcode walls, just to make a pure Mac app nobody will see. You can't make fun of Electron's runtime, which needs Node and Chromium, if you use Swift, which has a giant runtime turd because their amateur hour C++ compiler nerds can't make a stable ABI. The Mac's only future source of native apps is Marzipan ports.

There can be performance problems in Electron, but Slack's an outlier at 196MB binary and devouring 1.2GB RAM(!!!); it's the bloated WalMart-shopping fat-ass of Electron apps. Discord is also Electron, it has a 136MB binary and uses 360MB RAM, and does more, faster and better than Slack. Atom is the original Electron, has a 541MB binary, and uses 600MB RAM, for an entire editor/IDE.

My game currently has a 139MB binary, and uses 200-300MB RAM when running. Comparing to a random casual game from my Steam library, Chainsaw Warrior (well, "casual"; I've only beaten it once on Easy). It's based on Unity (another VM!), has a 249MB binary, and uses 200MB RAM when running, plus Steam itself uses 130MB RAM (I may yet integrate Steam into mine, so that may even out). It doesn't seem excessive.

I can't compare my Swift game prototype from 3 years ago, because it was written in a version of Swift that doesn't compile in any Xcode that runs on current hardware & OS, and Xcode "helpfully" deleted the built binary; who needs working binaries, right? I might have an old Xcode on my old laptop? Maybe I could waste a couple days fixing the code by hand in current Xcode, if I hated myself or loved Timmy Cook's Apple that much?

New languages evolve fast, but I can run 20-year-old Javascript and it'll run thousands of times faster than it did in the '90s, because the language was improved with forwards-compatibility in mind, hardware caught up, and the newer VMs compile & run it faster. I can compile 30-year-old Objective-C, and it'll run.

We had something similar to web tech 20 years ago with desktop Java, but the convicted criminal organization Microsoft sabotaged it and made a shitty single-platform ripoff called C#. Viruses became a problem for applets, which had nothing to do with desktop Java, but killed Java deployment even before Oracle bought & ruined SUN. Android runs on another Java ripoff, but their dev tools and APIs are even shittier than Xcode or C#, and the users are poor, so why make anything for them? Server-side development in Java, Clojure, or Scala, running on the Java VM, is hidden away in a back room, and made as boring as possible.

So now we have to reinvent the runtime, this time with Node & Chromium. OK with me.