December 9, 1968, Douglas Engelbart's presentation of NLS and teleconferencing:

- YouTube: This one is at 360p; most other uploads are 240p fuzzy mud. I'd love to have a good HD one where I can read the text. Alas.

- TheDemo@50

"If in your office, you as an intellectual worker were supplied with a computer display, backed up by a computer that was alive for you all day, and was instantly responsible—responsive—to every action you had, how much value would you derive from that?"

Of course, in reality what we mostly do with that is look at social media, hardly any better than watching TV. But we could do more.

It's been years since I've watched this, and some things jump out at me as I rewatch:

The keyboard beep is infuriating; it's what I consider an error sound. And Doug fumbles a few times, which suggests the keybindings aren't visible, well-organized, or practiced yet. We see later that they're just code mapped to keys in a resource list.

The NLS word demo is somewhat like a modern programmer's editor with code folding, but notably I never use folding: it's slow (even on machines a million times faster!) and error-prone, sucking up far more text than expected. It's also a lot like outliners such as OmniOutliner; but while I do sometimes use OO to organize thoughts, I would never keep permanent data in it, because I can't get it into anything else I use. Dumb text is still easier and more reliable; I put my lists in Markdown lists:

    - Produce
        + Banana
            * Skinless
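Part of the appeal of dumb text is how little code it takes to get a tree back out of it. Here is a minimal Python sketch, assuming the list is nested by indentation (four spaces per level is my assumption, as is the `{"text", "children"}` node shape; `parse_list` is a made-up helper, not part of any library):

```python
import re

# Matches an optional indent, a bullet marker (-, + or *), and the item text.
ITEM = re.compile(r"^(\s*)[-+*]\s+(.*)$")

def parse_list(text, indent=4):
    """Turn an indented Markdown list into nested {"text", "children"} dicts."""
    root = {"text": None, "children": []}
    stack = [root]  # stack[d] is the most recent node at depth d (root is depth 0)
    for line in text.splitlines():
        m = ITEM.match(line)
        if not m:
            continue  # skip blank lines and anything that isn't a list item
        depth = len(m.group(1).expandtabs(indent)) // indent + 1
        depth = min(depth, len(stack))  # tolerate an item indented too far
        node = {"text": m.group(2).strip(), "children": []}
        del stack[depth:]  # drop deeper levels left over from earlier items
        stack[-1]["children"].append(node)
        stack.append(node)
    return root["children"]

# Hypothetical sample; "Dairy" is added only to show a second top-level item.
sample = """\
- Produce
    + Banana
        * Skinless
- Dairy
"""
tree = parse_list(sample)
print(tree[0]["children"][0]["children"][0]["text"])
```

This is nowhere near a full Markdown parser; it ignores wrapped lines and numbered items, but it is enough for shopping-list-grade outlines.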

Maybe the answer is we should have better tools and APIs for managing outlines? Right now I can manage dumb text from the shell, or any scripting language, or with a variety of GUI tools. OmniOutliner's "file format" is a bundle folder with some preview images and a hideous XML file with lines like:

    <item id="kILRUkulXwk" expanded="yes">
      <values>
        <text>
          <p>
            <run>
              <lit>Stuff</lit>
            </run>
          </p>
        </text>
      </values>
      <children>

Nothing sane can read that; even if I use an XML tree library, it's still `item.values[0].text.p.run.lit` to get a single value out!
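For the record, this is roughly what that extraction looks like with Python's standard `xml.etree.ElementTree`. It's only a sketch: the closing tags and the empty `<children/>` in the sample are filled in by me so it parses, I'm assuming no XML namespace (a real file may well declare one), and `item_text` is a hypothetical helper:

```python
import xml.etree.ElementTree as ET

# Hypothetical, completed version of the excerpt: the closing tags and the
# empty <children/> are filled in so that the sample parses on its own.
SAMPLE = """\
<item id="kILRUkulXwk" expanded="yes">
  <values>
    <text><p><run><lit>Stuff</lit></run></p></text>
  </values>
  <children/>
</item>
"""

def item_text(item):
    """Join every <lit> under the item's own <values>, skipping child items."""
    values = item.find("values")
    if values is None:
        return ""
    return "".join(lit.text or "" for lit in values.iter("lit"))

item = ET.fromstring(SAMPLE)
print(item.get("id"), item_text(item))

# The same single value via one path expression; still five levels deep.
print(item.findtext("values/text/p/run/lit"))
```

A path string beats chained attribute access, but you still have to know the whole nesting in advance just to read one word.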

If I export it to OPML, it loses all formatting and everything else nice, but I get a more acceptable:

<outline text="Stuff">
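OPML is simple enough that turning it back into a dumb-text list is a few lines. A sketch under assumptions: the sample document below is made up, `opml_to_markdown` is a hypothetical helper, and only the `text` attribute is read (notes and any other attributes are ignored):

```python
import xml.etree.ElementTree as ET

# Hypothetical OPML document, made up for this sketch.
OPML = """\
<opml version="2.0">
  <head><title>Groceries</title></head>
  <body>
    <outline text="Produce">
      <outline text="Banana">
        <outline text="Skinless"/>
      </outline>
    </outline>
    <outline text="Stuff"/>
  </body>
</opml>
"""

def opml_to_markdown(source, indent="    "):
    """Render nested <outline> elements as an indented Markdown list."""
    lines = []

    def walk(element, depth):
        # Only direct <outline> children belong to this level.
        for child in element.findall("outline"):
            lines.append(f"{indent * depth}- {child.get('text', '')}")
            walk(child, depth + 1)

    body = ET.fromstring(source).find("body")
    if body is not None:
        walk(body, 0)
    return "\n".join(lines)

print(opml_to_markdown(OPML))
```

The formatting is still gone, of course; this only recovers the hierarchy.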

Back to the demo.

The drawing/map editor's interesting. This is pretty much what HyperCard was about, and why it's so frustrating that nobody can make a good modern HyperCard.

Basically every document seems to be a single page, fixed on screen. If a list gets too long, what happens? It doesn't scroll; it just pages fully forward or back.

Changing the view parameters is basically CSS; CSS for the editor! That's what makes Atom so powerful, but it's not easy to switch between views there: you'd probably have to make your own theme plugins, or just write a script to alter the config file and then reload the editor view.

Inline links to other documents in your editor are interesting. Obviously we can write HTML links, but they have to be rendered out, and no editor can figure out where a reference goes and let you click on it. Actually, this does work in Vim's help system, but nowhere else.

The mouse has changed three ways since then: the tail moved to the top, the wheels became a ball driving two rollers inside, and then the ball was replaced with a laser watching movement under a window. Don't look into the butthole of your mouse with remaining eye. But the basic principle of relative movement of a device moving the pointer, rather than a direct touch like Ivan Sutherland's Sketchpad light pen, or modern touch screens, or a Bluetooth stylus, remains unchanged, and it's still the fastest way to point at a thing on a screen. Oh, and the pointer was called a "bug" and pointed straight up; Xerox copied this directly in their Star project, while everyone since Apple has used an angled arrow pointer.

The chording keyboard never took off, and I've used a few, and see why: It's incredibly hand-cramping. While a two-handed keyboard is awkward with a mouse, you have room to spread your fingers out, and only half the load of typing is borne by each hand. On a chord, each finger is doing heavy work every character.

The remote screen/teleconferencing setup is hilarious: a CRT being watched by a TV camera, which runs to a microwave transmitter. They couldn't send it over phone lines, because acoustic coupler modems were only 300 baud (bits per second, roughly) at the time.

As with Skype today, every chat starts with "I can't hear you, can you hear me? Fucking (voice chat system)." Later, audio drops out, and all Doug can do is wave his mouse at the other presenter. I've joked before that the most implausible thing in Star Trek isn't FTL, even though that's physically impossible; it's not aliens indistinguishable from humans with pointy ears, half black/white makeup, or bumpy foreheads; it's that you meet an alien starship and can instantly set up two-way video conferencing.

They seem to have a mess of languages; MOL (Machine Oriented Language) is a macro assembler in modern terms. All the languages have to be adapted to NLS; they couldn't just use LISP or FORTRAN. Since changes are recorded by userid, they had git blame!

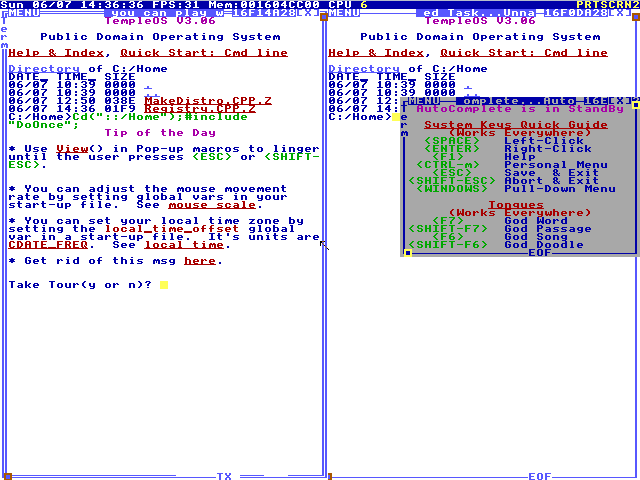

Split screen! That's a thing I love, and few editors do. You can drag a bar down from the top in BBEdit, and Atom has "Split up/down/left/right" for panes, but then you have to re-open the document in each and it's a pain.

Messaging is a public board (or rather, an outline with each statement as a message), with #INITIALS for addressing, like @USERNAME in the Twitters and such. Like those, there's too much data to process for live updating, so everything runs as a batch job that can crash the database. War Computing never changes.

Cold & hot retrieval are just file search; on the Mac we have Spotlight, and can search by keywords or filename. I have some problems with the cmd-space search these days, though, and mostly open Finder and search from there to get a list of files matching various requirements, or sometimes run `mdfind whatever | less` from the shell, then winnow down "whatever" until I have only a few results. On Windows or Linux, you're fucked; get used to very long slow full-text searches.

What NLS Did, and How We Can Do That

- Mouse, Keyboard, bitmapped displays: We have that.

- Teleconferencing: Still sucks.

- System-Wide Search: Mac users have that, everyone else is boned.

    - It's faster on Linux or Windows to search Google for another copy of existing data than to search the local machine.

- Outlining to enter hierarchical data: Nope.

    - All data goes into outlines contained in files.

    - Code as data: Some data is program instructions, in a variety of languages, which can operate on outlines.

    - To enter this outline, I had to keep adjusting my numbers, because I'm writing it in markdown text.
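The renumbering chore, at least, is exactly the kind of thing a small script over dumb text can take off your hands. A rough Python sketch, assuming one item per line and four spaces of indentation per level (both assumptions of mine; `number_outline` is a made-up helper, and the sample items are abbreviated from the list above):

```python
def number_outline(text, indent=4):
    """Prefix each non-blank line with an outline number such as 3.1."""
    counters = []  # counters[d] is the current item number at depth d
    out = []
    for line in text.splitlines():
        if not line.strip():
            continue  # blank lines carry no item
        stripped = line.lstrip(" ")
        depth = (len(line) - len(stripped)) // indent
        depth = min(depth, len(counters))  # tolerate over-indented lines
        del counters[depth + 1:]           # leaving a deeper level resets it
        if depth == len(counters):
            counters.append(0)             # first item at a new depth
        counters[depth] += 1
        label = ".".join(str(n) for n in counters)
        out.append(f"{' ' * indent * depth}{label}. {stripped}")
    return "\n".join(out)

draft = """\
Mouse, keyboard, bitmapped displays
Teleconferencing
System-wide search
    Faster to search Google
Outlining
    All data goes into outlines
    Code as data
"""
print(number_outline(draft))
```

Insert or delete a line in `draft`, rerun, and the numbers fix themselves. Note that labels like `3.1.` are plain text, not something a Markdown renderer treats as a nested ordered list.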

As mentioned above, OmniOutliner is logically very similar, but it's a silo, a trap for your data. The pro version (WHY not every version?!) lets you use Omni Automation, which is basically AppleScript using JavaScript syntax; the problem is waiting for an app to launch, then figuring out where your data is hidden inside some giant structure like `app.documents[0].canvases[0].graphics[2]` (example from the Omni docs), just so you can extract it for your script.

Brent Simmons is working on Rainier/Ballard, which is a reimagining of Dave Winer's Frontier. I think building a new siloed language and system doesn't solve the real problem, but maybe it'll get taken up by others.

I have for some time been toying with enhancing my Learn2JS shell into an Electron application that would let you write, load, save, and run scripts in a common framework, without any of the boilerplate it needs now. A pure JS shell is just too limited around file and network access, and Node by itself is too low-level to get any useful work done. I'm not sure how that works with everything else in your system. While browser localStorage of 5MB or so is sufficient for many purposes, you really want to save local files. While this doesn't force data into outlines, it makes code-as-data easy, and JavaScript Object Notation (JSON) encourages storing everything as big trees of simple objects, which your functions operate on.
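To make the "big trees of simple objects" idea concrete, here is a small sketch. It's in Python rather than in the JS shell described above, purely for illustration; the same shape carries over to JavaScript objects unchanged. The `text`/`children` node layout and the `walk` and `find` helpers are my own assumptions, not any existing format:

```python
import json

# Hypothetical outline: every node is a plain object with "text" and "children".
outline = {
    "text": "Groceries",
    "children": [
        {"text": "Produce", "children": [
            {"text": "Banana", "children": []},
        ]},
        {"text": "Dairy", "children": []},
    ],
}

def walk(node, depth=0):
    """Yield (depth, node) for a node and all of its descendants, depth first."""
    yield depth, node
    for child in node["children"]:
        yield from walk(child, depth + 1)

def find(node, text):
    """Return the first node whose text matches exactly, or None."""
    return next((n for _, n in walk(node) if n["text"] == text), None)

# Edit the tree with ordinary code, then round-trip it through JSON text.
find(outline, "Banana")["children"].append({"text": "Skinless", "children": []})
saved = json.dumps(outline, indent=2)
restored = json.loads(saved)

for depth, node in walk(restored):
    print("    " * depth + "- " + node["text"])
```

The `saved` string is what would go into a local file; everything else is just functions over dicts and lists, which is the whole point.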

(I'm having fun with Scheme as a logic puzzle, but it's not anything I'd inflict on a "normal" person trying to work on data that matters.)

If you want to talk about doing more with this, reach me @mdhughes on Fediverse.