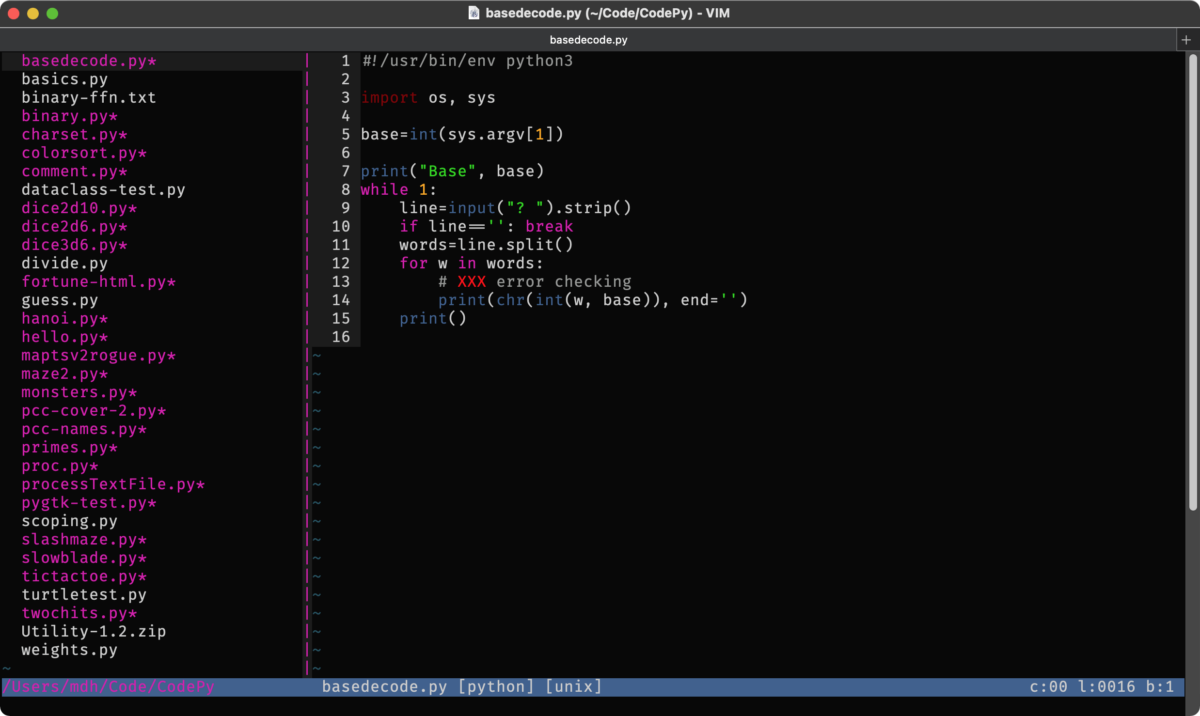

So I figured I should modernize my Vim skills, from 1995 to 2023. A lot's changed since I last configured Vim.

I installed a modern MacVim, in my case with sudo port install MacVim. It's launched with mvim, so I just changed my alias to alias v=mvim in my .zshrc.

In the code blocks below, ~% is my shell prompt, and ## filename shows the contents of a file; cat into it or whatever. Neither of those lines belongs in the file!

To start, I want to use vim9script. So my old .vimrc now starts with that mode command, then I changed all my comments from " to #. Not much else had to change. The way to detect MacVim etc is clearer now, and I can get ligatures from Fira Code!
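A minimal sketch of what that conversion looks like, assuming a MacVim build for the ligature option. The names example_author and greeting are hypothetical and not part of the config below.

```vim
vim9script
# Legacy Vim script used a double quote for comments and :let for assignment:
#   " enable ligatures in MacVim
#   let g:example_author = 'Someone'
# In vim9script, comments start with # and :let is gone.

# Hypothetical global, assigned without :let.
g:example_author = 'Someone'
# Script-local variables are declared with var.
var greeting = 'hello'

# Feature checks with has() work as before.
if has('gui_macvim')
    set macligatures
endif
```

Note that vim9script has to be the first command in the file, so it appears only once at the top of a real .vimrc.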

Syntax highlighting files can just be dropped in ~/.vim/syntax/
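The .vimrc below sources hackerman.vim unconditionally. If the same .vimrc is shared with a machine where that file has not been copied yet, a guarded load avoids a startup error. This is only a sketch; the variable name theme is an assumption for illustration.

```vim
vim9script
# Path of a syntax/color file dropped into ~/.vim/syntax/
var theme = expand('~/.vim/syntax/hackerman.vim')

# Only source it when it exists, so a fresh machine does not error on startup.
if filereadable(theme)
    execute 'source ' .. fnameescape(theme)
endif
```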

Update 2023-04-11: added statusline highlight colors, under syntax loading

## .vimrc
vim9script
# Mark Damon Hughes vimrc file.
# Updated for Vim9, 2023-04-09
#
# To use it, copy it to ~/.vimrc
# Note: create ~/tmp, ~/.vim, see source commands below.

set nocompatible # Use Vim defaults (much better!)
filetype plugin on
set magic
set nrformats=
set errorbells
set nomore wrapscan noignorecase noincsearch nohlsearch noshowmatch
set backspace=indent,eol,start
set nosmarttab noexpandtab shiftwidth=8 tabstop=8
set encoding=utf-8 fileencoding=utf-8
set listchars=tab:__,eol:$,nbsp:@
set backup backupdir=~/tmp dir=~/tmp
set viminfo='100,f1,<100
set popt=header:2,number:y # header:2 = two header lines, number:y = print line numbers
set tw=80 # I use this default, and override it in the augroups below

# ctrl-] is used by telnet/ssh, so tags are unusable; I use ctrl-j instead.
set tags=./tags;/
map <c-j> <c-]>

# Don't use Ex mode, use Q for formatting
map Q gq

map <Tab> >>
vmap <Tab> >
map <S-Tab> <<
vmap <S-Tab> <

# Always have syntax highlighting on
syntax on
# https://github.com/mr-ubik/vim-hackerman-syntax
# changed:
# let s:colors.cyan = { 'gui': '#cccccc', 'cterm': 45 } " mdh edit
# let s:colors.blue = { 'gui': '#406090', 'cterm': 23 } " mdh edit
source $HOME/.vim/syntax/hackerman.vim

set laststatus=2 # 2=always
# %ESC: t=filename, m=modified, r=readonly, y=filetype, q=quickfix, ff=lineending
# =:right side, c=column, l=line, b=buffer, 1*=highlight user1..9, 0=normal
set statusline=\ %t\ %m%r%y%q\ [%{&ff}]\ %=%(c:%02c\ l:%04l\ b:%n\ %)
set termguicolors
hi statusline guibg=darkblue ctermbg=1 guifg=white ctermfg=15
hi statuslinenc guibg=blue ctermbg=9 guifg=white ctermfg=15
hi Todo term=bold guifg=red

# Use `:set guifont=*` to pick a font, then `:set guifont` to find its exact name
set guifont=FiraCode-Regular:h16
if has("gui_macvim")
    set macligatures
    set number
elseif has("gui_gtk")
    set guiligatures
    set number
endif
set guioptions=aAcdeimr
set mousemodel=popup_setpos
set numberwidth=5
set showtabline=2

augroup c
    au!
    autocmd BufRead,BufNewFile *.c set ai tw=0
augroup END

augroup html
    au!
    autocmd BufRead,BufNewFile *.html set tw=0 ai
augroup END

augroup java
    au!
    autocmd BufRead,BufNewFile *.java set tw=0 ai
augroup END

augroup objc
    au!
    autocmd BufRead,BufNewFile *.m,*.h set ai tw=0
augroup END

augroup php
    au!
    autocmd BufRead,BufNewFile *.php,*.inc set tw=0 ai et
augroup END

augroup python
    au!
    autocmd BufRead,BufNewFile *.py set ai tw=0
augroup END

augroup scheme
    au!
    autocmd BufRead,BufNewFile *.sls setf scheme
    autocmd BufRead,BufNewFile *.rkt,*.scm,*.sld,*.sls,*.ss set ai tw=0 sw=4 ts=4
augroup END

Package Managers & Snippets

Next I need a package manager. I've settled on vim-plug as complete enough to be useful, not a giant blob, and maintained. There are at least 7 or 8 others! Complete madness out there.

(I've already picked one, I don't need further advice, and will actively resent you if you give me any. I'm just pointing at the situation being awful.)

Install's easy: drop plug.vim in ~/.vim/autoload, then mkdir -p ~/.vim/plugged
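If you'd rather have the .vimrc fetch vim-plug itself, here is a minimal sketch adapted from the bootstrap pattern in vim-plug's own documentation. It assumes curl is installed and that the lines sit near the top of a vim9script .vimrc, before call plug#begin(); plug_file and plug_url are names made up for this example.

```vim
vim9script
# Where vim-plug is expected to live for regular Vim (not Neovim).
var plug_file = expand('~/.vim/autoload/plug.vim')
# Download location published in the vim-plug README.
var plug_url = 'https://raw.githubusercontent.com/junegunn/vim-plug/master/plug.vim'

if !filereadable(plug_file)
    # --create-dirs makes ~/.vim/autoload if it is missing.
    silent execute '!curl -fLo ' .. shellescape(plug_file, 1) .. ' --create-dirs ' .. plug_url
    # Install the declared plugins once Vim has finished starting.
    autocmd VimEnter * PlugInstall --sync
endif
```

On a machine that already has plug.vim the whole block is skipped, so it costs nothing on a normal startup.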

The first thing I want is a snippet manager, and SnipMate's the best of those. Add this at the end of .vimrc and set your "author" name; it's used by several snippets.

## .vimrc
call plug#begin()
Plug 'https://github.com/MarcWeber/vim-addon-mw-utils'
Plug 'https://github.com/tomtom/tlib_vim'
Plug 'https://github.com/garbas/vim-snipmate'
Plug 'https://github.com/honza/vim-snippets'
g:snips_author = 'Mark Damon Hughes'
g:snipMate = { 'snippet_version': 1,
    'always_choose_first': 0,
    'description_in_completion': 1,
    }
call plug#end()

Next part's super annoying. It needs a microsoft shithub account; I made a new one on a throwaway email, but I don't want rando checkouts using my real name. Git's includeIf loads an extra config file only when the repository lives under a given path, so now I have:

## .gitconfig
[include]
    path = ~/.gitconfig-kami
[includeIf "gitdir:~/Code/"]
    path = ~/.gitconfig-mark

## .gitconfig-kami
[user]
    name = Kamikaze Mark
    email = foo@bar

## .gitconfig-mark
[user]
    name = Mark Damon Hughes
    email = bar@foo

~% git config user.name
Kamikaze Mark
~% cd ~/Code/CodeChez
~/Code/CodeChez% git config user.name
Mark Damon Hughes

But shithub no longer has password logins! FUCK.

~% sudo port install gh
~% gh auth login

Follow the prompts and it stores a credential in the system keychain (and offers to generate an SSH key if you pick SSH). I hate this, but it works (on Mac; on Linux, install the package however you do, it works the same; on Windows, you have my condolences).

Now start vim and run :PlugInstall, and it should fetch them all. I had to run it a couple of times! Then :PlugStatus should show:

Finished. 0 error(s).
[====]
- vim-addon-mw-utils: OK
- vim-snipmate: OK
- vim-snippets: OK
- tlib_vim: OK

Let's create a snippet!

~% mkdir .vim/snippets

## .vim/snippets/_.snippets
snippet line
	#________________________________________
snippet header
	/* `expand('%:t')`
	 * ${1:description}
	 * Created `strftime("%Y-%m-%d %H:%M")`
	 * Copyright © `strftime("%Y")` ${2:`g:snips_author`}. All Rights Reserved.
	 */

And if I make a new file, hit i (insert), and type line<TAB>, it fills in the snippet! If I type c)<TAB>, it writes a copyright line with my "author" name; it's highlighted, so hit <ESC> to accept it (help says <CR> should work? But it does not). Basically like any programmer's editor from this millennium.

Update 2023-07-24: Added header, which is my standard document header; expand('%:t') is the filename with extension, and the rest is self-explanatory. Sometimes I add a license, which SnipMate preloads as BSD3, etc.
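The backtick sections in the header snippet are plain Vim expressions, so you can check what they will insert before using the snippet. A small sketch, to be sourced from a scratch file; the '(unset)' fallback is just a placeholder chosen for this example.

```vim
vim9script
# Current file name with extension, without the directory part.
echo expand('%:t')
# Creation timestamp, in the same format the snippet uses.
echo strftime('%Y-%m-%d %H:%M')
# Copyright year.
echo strftime('%Y')
# Author name from .vimrc, or a placeholder if g:snips_author is not set.
echo get(g:, 'snips_author', '(unset)')
```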

Use :SnipMateOpenSnippetFiles to see all the defined snippet files.

File Tree

NERDTree seems useful; read the page or :help NERDTree for docs. Add another plugin in .vimrc just before call plug#end(), do a :PlugUpdate, and it's that easy. But I want to hit a key to toggle the tree, and another key to focus the file, which takes me into the exciting world of vim9 functions.

## ~/.vimrc
Plug 'https://github.com/preservim/nerdtree'

# open/close tree
def g:Nerdtog()
    :NERDTreeToggle
    wincmd p
enddef
nnoremap <F2> :call Nerdtog()<CR>

# focus current file
nnoremap <S-F2> :NERDTreeFind<CR>
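A possible variant, sketched under the assumption of Vim 9.0 or later: with <ScriptCmd> the mapping can call a script-local function, so nothing has to be exported as g:. ToggleTree is a hypothetical name, and these lines would go after call plug#end() in the same vim9script .vimrc.

```vim
vim9script
# Hypothetical script-local version of the toggle function.
def ToggleTree()
    :NERDTreeToggle
    # Jump back to the previous window so focus stays on the file.
    wincmd p
enddef

# <ScriptCmd> runs the call in this script's context,
# so the function does not have to be global.
nnoremap <F2> <ScriptCmd>ToggleTree()<CR>
# <Cmd> runs the command without going through the command line.
nnoremap <S-F2> <Cmd>NERDTreeFind<CR>
```

The trade-off is that a script-local function can't be called by hand from the command line, which the g: version allows.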

Update 2023-04-11: In NERDTree, on a file, hit m for a menu, and you can quicklook, open in Finder, or reveal in Finder, and much more. There doesn't seem to be any right-click functionality, so it was not immediately obvious how to make it open my image files, etc.

And I think that's got me up to a baseline modern functionality.