math (+1 to count the 1st)

So, checks out. 10,000 days of the September That Never Ended.
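
For anyone who wants to redo the day count, here is a minimal sketch in Python. It assumes the count starts on 1 September 1993 as day 1 (hence the +1); the function names are made up for illustration.

```python
from datetime import date, timedelta

# Assumption: the September That Never Ended starts on 1 Sep 1993, counted as day 1.
ETERNAL_SEPTEMBER_START = date(1993, 9, 1)


def september_day(d: date) -> int:
    """Return the 'September 1993' day number of d (1 Sep 1993 is day 1)."""
    # Subtracting dates gives elapsed days; +1 so the 1st itself is counted.
    return (d - ETERNAL_SEPTEMBER_START).days + 1


def date_of_september_day(n: int) -> date:
    """Return the calendar date of 'September n, 1993'."""
    return ETERNAL_SEPTEMBER_START + timedelta(days=n - 1)
```

Under that assumption, `date_of_september_day(10000)` comes out as 16 January 2021.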

The world since is like a movie showing a few people coughing before the credits, wipe fade, zombie hordes tearing down barricades to eat the brains of the last few people. Someone's shivering in the corner with a gun, for the zombies or self, you can't tell. Freeze frame. "I bet you're asking how we got here…"

Note: I, uh, kinda infodumped here. Estimated reading time: 19 minutes.

What Went Wrong

At the time, I had a nice Gopher hole, finger and .plan (at times with a GIF of me uuencoded into it!), and was already annoyed by the overcomplicated World Wide Web rising. But in Feb 1993, UMinn saddled Gopher with threats of a license, which killed the better-organized system, and I was an adaptable guy. For quite a while I kept both, with equivalent content mirrored, but then my WWW site got more features, and the Gopher hole got stale, so I closed it.

A bunch of new kids invaded USENET every September when school started, and commercial Internet started in '89-91 when NSFNet removed their commercial restrictions, and then fucking AOL unleashed bored neo-nazis from the flyover states on us. There was a vast onslaught of spam, bullshit, and trolls. So I switched from rn which had primitive killfile regexps ("PLONK is the sound of your name hitting the bottom of my killfile"), to trn, which had threading and a little better killfile system, to strn which had scoring so if you hit multiple good or bad keywords, you'd move up or down my queue or vanish. I bailed on all the big groups, tried moderation and was promptly attacked by scumbags who thought the moderation system was for protecting their corporate masters, not stopping spam, and then quit entirely.
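
The scoring idea is simple enough to sketch. This is a rough illustration in Python of keyword scoring as described above, not strn's actual score-file syntax or behavior; the rules, the threshold, and every name here are hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical rules: (pattern, score delta). Good keywords add, bad ones subtract.
RULES = [
    (re.compile(r"\bgopher\b", re.I), 10),
    (re.compile(r"\bunix\b", re.I), 5),
    (re.compile(r"\baol\.com\b", re.I), -20),
    (re.compile(r"make money fast", re.I), -100),
]

# Assumption: anything scoring at or below this vanishes (PLONK).
KILL_THRESHOLD = -50


@dataclass
class Article:
    sender: str
    subject: str


def score(article: Article) -> int:
    """Sum the deltas of every rule that matches the sender or subject."""
    text = f"{article.sender} {article.subject}"
    return sum(delta for pattern, delta in RULES if pattern.search(text))


def reading_queue(articles: list[Article]) -> list[Article]:
    """Drop killed articles, then order the rest from best score to worst."""
    scored = [(score(a), a) for a in articles]
    kept = [(s, a) for s, a in scored if s > KILL_THRESHOLD]
    kept.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep arrival order
    return [a for _, a in kept]
```

Several weak hits add up, so one bad keyword only pushes a post down the queue, while a pile of them makes it disappear.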

We don't even have FAQs now. There's no netiquette at all (ha, Britannica, remember them? Site's probably not been touched since 1999). I hide out at the edges in Mastodon with very aggressive blocking of anyone who looks annoying. The big media sites, Twaddler and Fuckbook, are just poison, an endless scroll of screaming between everyone who wants to feel offended all the time, and the Orange Shitgibbon's mob of traitors; I see a very little of Twaddler by way of RSS, but I won't go any closer than that.

The Web. On most sites, there's megabytes of crappy scripts for tracking, style sheets, and giant custom fonts instead of banners & buttons burned into GIFs, so a page might take 100MB to show anything. The basic World Wide Web experience of click a link, page shows you slightly formatted text on an unpleasant background, click another link, is unchanged from 1993, but there's a dumpster of shit on top of that. I hate using the Web now: every goddamned page wants to track me, bounce banners up in front of me, and demand I approve cookies without letting me say "DENY ALL FUCK YOU"; and even without cookies, they use fingerprinting to track me.

It doesn't have to be like this. Despite using WordPress, the dumbest and most bloated thing possible, I've tried to keep my site down to a minimal setup; go read the page source, it's just CSS, content, and the search widget. If I ever get around to purging the default CSS, it'll be even lighter. But most people not only don't live up to that ethic, they aggressively want the opposite: the biggest, fattest, most unusable crap site full of autoplaying videos they can make.

Criminals. They can now use the Internet to attack physical infrastructure, or to encrypt computers and hold them for ransom (including in hospitals; some people need a stern talking to with a 2x4 or a shotgun). Back in the day, rtm's worm was a novel disaster, but fixable. Microsoft's garbage OS was trivially infected with viruses then and now, but back then it didn't matter much; you might lose a few un-backed-up files, not real money.

The Internet as trivial research device seems like it should be good, but what it's meant is that the Kids Today™ don't bother to learn anything, they just look up and recite Wikipedia, which is at least 50-80% lies. They "program" by searching StackUnderflow for something that looks like their problem, pasting it in, then searching again to solve the error messages. Most of them could be replaced with a Perl script and wget. I assume non-programming fields are similarly "solve it by searching", which is why infrastructure, medicine, and high-speed pizza delivery are so far inferior to 28 years ago.

Search was very slow back in the '90s, and the index sites were mostly filled in by hand. Now it's very fast, but only things linked from corporate shitholes actually show up, and spam and SEO poison all the results, so all you really get is Wikipedia, which might have a few manually-entered links at the bottom which might still exist or be in archive.org, or a few links to spam. Try searching for anything, it's all crap.

Vernor Vinge in 1992's A Fire Upon the Deep called a 50,000-years-from-now version of USENET "The Net of a Million Lies". Just a bit of an overshoot on the date, and a massive underestimate of the number of lies.

There's a lot of knock-on effects from the Internet as a sales mechanism. Like, videogames used to get QA tested until they mostly worked; fiascos like Superman 64 were rare. Now, Cyberpunk 2077 ships broken because they can patch it off the Internet, and it won't be fixed until actual 2077. Sure, not all games. I'm usually satisfied with Nintendo's QA, though even Animal Crossing: New Horizons shipped with less functionality and more bugs than Wild World on the (no patches!) DS cartridge.

What Is Exactly the Same

IRC, war never changes. I used ICB for my social group back then, and we moved from there to Slack. Most technical crap is discussed on IRC, rarely on Slack, Matrix, or Discord (which literally means conflict). Doesn't matter, it's just a series of text messages, because nobody's figured out how to make anything better that lasts.

I'm still using some version of UNIX. If you'd told me in 1993 that I'd be a Mac guy, I'd've opened your skull to see what bugs had infested your brain; Macs were only good for Photoshop and Kai's Power Tools. But Linux never got better, BSD is functional but never got a great desktop, Sun and SGI are dead <loud sustained keening wail>, and Apple bought/reverse-takeovered NeXT with a nice enough BSD-on-Mach UNIX. And the Internet is, largely, UNIX. There was a horrible decade from the mid-90s to the early-00s when Windows servers were gaining ground, and people were ripping out perfectly good UNIX data centers to install garbage at a huge loss in efficiency because their CTOs got bribed millions by Microsoft. But that tide washed up and back out, taking most of the MS pollution with it. Maybe it won't be back.

I still write web sites in Vim or BBEdit (since 1993: It Doesn't Suck™). Well, I say that, but I'm writing this mostly in the WordPress old text editor, using Markdown. Markdown's new-ish (2004), but behaves like every other text markup system going back to SGML in the '80s and ROFF in the '70s.

What's Good About the Internet

Not fucking much.

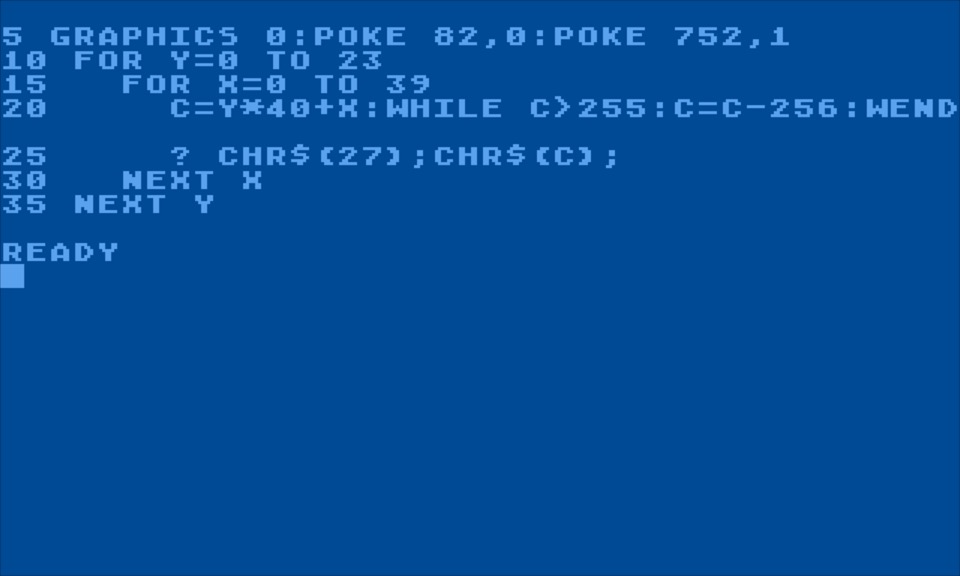

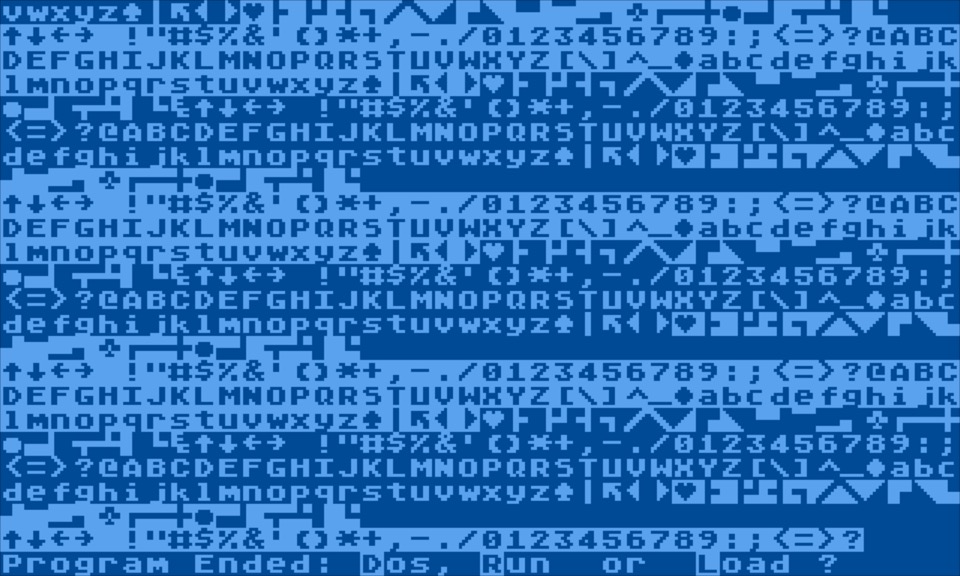

Streaming or borrowing digital copies of music, movies, and books is easier than ever. I speak mainly of archive.org, but sure, there's less-legal sites, too. I have access to an infinite library, of whatever esoteric interest I have; I've lately been flipping through old Kilobaud Magazine as part of my retrocomputing; I like the past where just getting or using a computer was hard and amazing. In 1993 those might have been mouldering away in a library basement, if they could be found at all. Admittedly, I hate most new media; nothing's been good enough for Mark since 1999, and really I could put the line at grunge, or maybe 1986 when The Police broke up. But at least it is accessible.

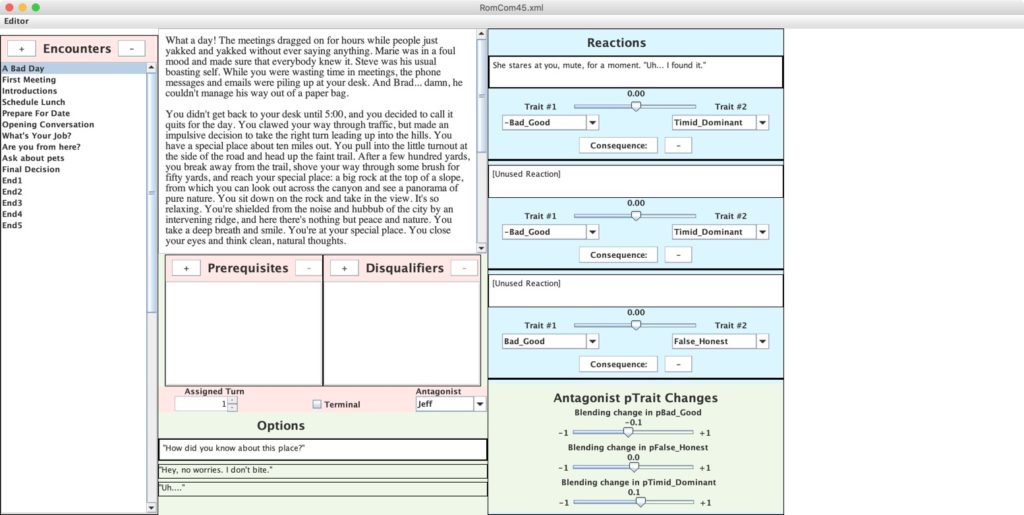

I spent most of today writing new stuff for the Mystic Dungeon, and even with all the overcomplicated web shit, it's a little easier to build a secure, massively parallel message system in JS than it was in C or Perl 30 years earlier. Not by much, but some.

Internet pornography is a tough one. '70s-80s VHS porn was expensive, flickery, way too mainstream; fine if you liked chunky old guys banging ugly strippers, I did not. DVD porn in the '90s was still expensive, but got much better production, and covered every niche interest; that was the golden age. Now everything is "free" on the thing-hubs and x-things, but only in crappy 6-minute excerpts stolen from DVD, horrible webcam streams, and the creepifyin' rise of incest porn. Because the Internet enables weird interests, but what if a whole generation has massive mommy/daddy issues? You can in fact pay for good non-incest porn, but payment processors and credit cards make it hard to do, so it's easier to just watch garbage. And then there's prudes and religious zealots who think porn is bad; in the old days, they had the law and molotov cocktails on their side, but now they're impotent, so I guess that's barely a win for the Internet.

What Didn't We Get

The Metaverse. OK, there was and is Second Life, but Linden fucked the economy up, and never made it possible to take your grid and host it yourself without a gigantic effort. There's WebVR and a few others, but they have terrible or no avatars, construction, and scripting tools. We should be able to be scanned and be in there, man, like in TRON.

The Forum. There's no place of polite social discourse. There's hellsites, and some sorta private clubs, and a bunch of abandoned warehouses where people are chopped up for body parts/ad tracking. Despite my loathing of Google, who are clearly trying to implement SkyNet & Terminators and exterminate Humanity, Google+ was OK, so of course they shut it down.

The Coming Golden Age of Free Software That Doesn't Suck. Turns out, almost everyone in "FLOSS", the FSF, and GNU, are some of the shittiest people on Earth, and those who aren't are chased out for daring to ask for basic codes of conduct and democracy. Hey you know that really good file system? Yeah, the author murdered his wife, and the "community" is incompetent to finish the work, so keep using ext which eats your files. Sound drivers on Linux, 16 years after I ragequit because I couldn't play music and alarm sounds at the same time, still don't work. "Given enough eyes, everyone goes off to write their own implementation instead of fixing bugs"; nothing works, every project just restarts at +1 version every 2-5 years. Sure, you can blame capitalism, but there's a couple of communist countries left, why aren't they making infinitely better software without the noose of the dollar around their necks?

The Grand Awakening of Humanity. This was always delusional, but the idea was that increased communication between people of Earth would end war, everyone would come together, align their chakras/contact the UFOs, and solve all our problems. Ha, no, you put 3 people in a chat room and you'll have 5 factions and at least one dead body in a week. As we approach 7 billion people online, many with explosively incompatible and unfriendly views, this is only going to get worse, if that's even imaginable.

Final Rating: The Internet

★★½☆☆ — I keep watching this shitshow, but it's no damn good. Log off and save yourself.