Tag: nerd

Ready Player One

I loved the book of Ready Player One. It plays with deep matters of '80s nerdery: original and "Advanced" Dungeons & Dragons, especially S1 Tomb of Horrors; old microcomputer, arcade, and home video games (and the very different kinds of games on them); and Rush's more esoteric albums. It's kind of incomprehensible if you weren't alive in the '70s and '80s and into these specific things. It's pretty brilliant if you were. It's a story of logic puzzles and careful research, and it follows much of the story structure of WarGames.

★★★★★

The movie is none of these things. It's a very pretty film, largely CGI inside the OASIS MMO, but it replaces the intellectual challenges with a very stupid car race; a very precise and funny adaptation of a cinephile's (but not a geek's) movie, which was NOT in the book and is well outside Halliday's interests; and a final battle, well adapted in scale and craziness, but with the final key hidden in… is this a spoiler if it's in section 0000 of the book? Adventure for the Atari 2600. Well, it's kind of too obvious to even mention if you're looking for an Easter Egg. Did IQs drop sharply in the Spielberg-verse?

SUPER picky detail (but this is in fact what the book is about, being super picky): In the funeral/contest video, the quarters on James Halliday's eyes in the movie were, if my eyes did not deceive me, from 1972. Book says:

"High-resolution scrutiny reveals that both quarters were minted in 1984."

Why change it? Because either they didn't care, or because Spielberg is literally older than dirt, older than rocks, older than "Steven Spielberg is old" jokes, so old that he thinks 1972 is "better" than 1984 (it is not). Everything else about the funeral video is wrong, too, but that's beside my point here about picky detail.

Ogden appears like a Willy Wonka at the end, in a fairly crappy, formulaic ending. It's fucking Spielberg, so you know it's going to be schmaltzy and fall apart at the end, but the extent of the failure is almost epic. The hobbits^W corporate research drones cheering Wade at the end is nonsense filmmaking.

The music varies from great '80s pop music, sometimes in appropriate places; a few pieces of '80s soundtrack music in exactly the right place; to poorly-timed, almost counterproductive incidental music. I loathe Saturday Night Fever, as previously mentioned, and having another dance scene based on it is annoying; the book does mention "Travoltra"[sic] dancing software, but you don't have to see or hear it. I felt nothing from the incidental music. Did Spielberg go deaf in his extreme old age? His old films at least had good scores, but this was vapid.

The final "rule" of disabling the OASIS, the global center of business, education, and entertainment, on Tuesday and Thursday is so stupid only a very stupid old filmmaker could conceive of it.

There is no Ferris scene after the credits, which would have been a great place to at least leave us smiling, instead of "huh, that was not good".

It lacks the brains, heart, and music of a classic '80s film. Go watch TRON or WarGames instead.

★★½☆☆ only because it is so very pretty, ★☆☆☆☆ for plot. Validates my movie policy that book adaptations are always worse than the book, and adds a new one: Don't watch anything by Steven Spielberg. Will some kind nursing home attendant not just put a pillow over his face and end our suffering?

How Much Computer?

I learned to program on a TRS-80 Model I. And for almost any normal need, you could get by just fine on it. You could program in BASIC, Pascal, or Z80 assembly, do word-processing, play amazing videogames and text adventures, or write your own.

There are still people using their TRS-80s as a hobby: the TRS-80 Trash Talk podcast, the TRS8BIT newsletter, and people making hardware like the MISE Model I System Expander. With the latter, it's possible to use a Model I for some modern computing problems. I listen to the podcast out of nostalgia, but every time the urge to buy a Model I and a MISE comes over me, I play with a TRS-80 emulator and remember why I shouldn't be doing that.

If that's too retro, you can get a complete Raspberry Pi setup for $100 or so, perfectly fine for some light coding (hope you like Vim, or maybe Emacs, because you're not going to run Atom on it) and for most end-user tasks like word processing and email. Web browsing is going to be a little challenging: you can't keep dozens or hundreds of tabs open (as I insanely do), and sites with creative modern JavaScript are going to eat it alive. It can play Minecraft, sorta; it's the crippled Pocket Edition with some Python APIs, but it's something.

I'm planning to pick one up, case-mod it inside a keyboard, and make myself a retro '80s cyberdeck, more as an art project than a practical system, but I'll make things work on it, and I want to ship something on Raspbian.

"It was hot, the night we burned Chrome. Out in the malls and plazas, moths were batting themselves to death against the neon, but in Bobby's loft the only light came from a monitor screen and the green and red LEDs on the face of the matrix simulator. I knew every chip in Bobby's simulator by heart; it looked like your workaday Ono-Sendai VII, the "Cyberspace Seven", but I'd rebuilt it so many times that you'd have had a hard time finding a square millimeter of factory circuitry in all that silicon."

—William Gibson, "Burning Chrome" (1982)

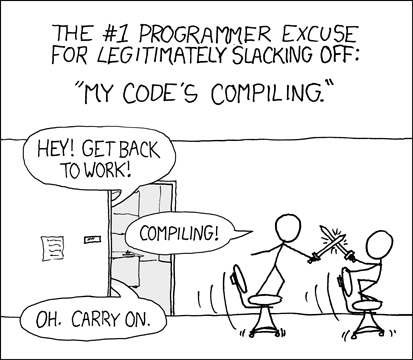

Back in the day, I would work on Pascal, C, or Scheme code in a plain text editor (ed, vi (Bill Joy's version), or steVIe) all morning, start a compile, go to lunch, come back and read the error log, fix everything, recompile and go do something else, and repeat until I got a good build for the day. Certainly this encouraged better code hygiene and thinking through problems instead of just hitting build, but it wasn't fun or rapid development. That's the problem with these retro systems: the tools I use now take all the RAM and CPU and want more.

These days, I mostly code in Atom, which is the most wasteful editor ever made but great when it's working. I expect my compiles to take seconds or less (and I don't even use the ironically-named Swift). When I do any audio editing (in theory I might do some 3D in Unity, or video editing, but in practice I barely touch those), I can't sit there trying an effect and waiting minutes while it burns the CPU. And I'm still and forever hooked on Elder Scrolls Online, which runs OK, but not at the highest FPS, on my now-3-year-old 5K iMac.

For mobile text editing and a little browsing or video watching, I can use a cheap iPad, which happily gets me out of burning a pile of money on laptops. But I'm still stuck with the desktop work machine: I budget $2000 or more every 4 years for a dev and gaming Mac. Given a baseline of $8000 for an iMac Pro configuration I'd consider useful, and whatever more the Mac Pro is going to cost, I'd better start putting some money together for that.

I can already hear the cheapest-possible-computer whine of "PC Master Race" whom I consider to be literal trailer trash Nazis in need of a beating, and I'd sooner gnaw off a leg than run Windows; and Lindorks with dumpster-dived garbage computers may be fine for a little hobby coding, but useless for games, the productivity software's terrible (Gimp and OpenOffice, ugh), and the audio and graphics support are shit. The RasPi is no worse than any "real" computer running Linux.

In the '80s, low-end computers barely more than game consoles cost $200, and "high-end" ones with almost the same specs other than floppy disks and maybe an 80-column display cost $2500 ($7921 in 2017 money!!!), but you simply couldn't do professional work on the low-end machines. Now there's a vast gulf of capability between the low end and the high end, the price difference is the same, and I still need an expensive machine for professional work. Is that progress?

Data and Reality (William Kent)

A book that's eternally useful to me in modelling data is William Kent's Data and Reality. Written in what we might call the dark ages of computing, it's not about specific technologies, but about unchanging but ever-changing reality, and strategies to represent it. Any time I get confused about how to model something or how to untangle someone else's representation, I reread a relevant section.

The third ambiguity has to do with thing and symbol, and my new terms didn’t help in this respect either. When I explore some definitions of the target part of an attribute, I get the impression (which I can’t verify from the definitions given!) that the authors are referring to the representations, e.g., the actual four letter sequence “b-l-u-e”, or to the specific character sequence “6 feet”. (Terms like “value”, or “data item”, occur in these definitions, without adequate further definition.) If I were to take that literally, then expressing my height as “72 inches” would be to express a different attribute from “six feet”, since the “value” (?) or “data item” (?) is different. And a German describing my car as “blau”, or a Frenchman calling it “bleu”, would be expressing a different attribute from “my car is blue”. Maybe the authors don’t really mean that; maybe they really are willing to think of my height as the space between two points, to which many symbols might correspond as representations. But I can’t be sure what they intend.

—Bill Kent
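Kent's thing/symbol distinction maps directly onto code. Here's a minimal Python sketch of my own, not anything from the book: the "thing" is stored once in a canonical form, and each symbol ("72 inches", "six feet", "blau", "bleu") is just a representation that gets mapped onto it. The names `Length`, `Color`, `parse_length`, `parse_color`, and the tiny lookup tables are hypothetical, and a real model would need far more units, words, and languages.

```python
from dataclasses import dataclass
from enum import Enum, auto
from fractions import Fraction

# Hypothetical lookup tables for this sketch only.
INCHES_PER_UNIT = {"inch": Fraction(1), "inches": Fraction(1),
                   "foot": Fraction(12), "feet": Fraction(12)}
WORD_NUMBERS = {"six": 6, "seventy-two": 72}


@dataclass(frozen=True)
class Length:
    """The thing itself: a distance, held in one canonical unit (inches)."""
    inches: Fraction


class Color(Enum):
    """The thing itself: a color, independent of any language's word for it."""
    BLUE = auto()


# Symbols in several languages that all denote the same color.
COLOR_WORDS = {"blue": Color.BLUE, "blau": Color.BLUE, "bleu": Color.BLUE}


def parse_length(text: str) -> Length:
    """Map a symbol such as "72 inches" or "six feet" to the Length it denotes."""
    amount, unit = text.lower().split()
    number = WORD_NUMBERS.get(amount)
    if number is None:
        number = Fraction(amount)  # digits, e.g. "6" or "72"
    return Length(number * INCHES_PER_UNIT[unit])


def parse_color(word: str) -> Color:
    """Map a color word in any supported language to the Color it denotes."""
    return COLOR_WORDS[word.lower()]


# Different symbols, same attribute value: the comparison is on the thing.
print(parse_length("72 inches") == parse_length("six feet"))  # True
print(parse_color("blau") is parse_color("bleu"))              # True
```

Equality is checked on the parsed thing, never on the strings, which is exactly the distinction Kent couldn't find in the definitions he was reading.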

I originally read the 1978 edition in a library, eventually got the 1998 ebook, and as of 2012 there's a posthumous 3rd edition which I haven't seen; I would worry that "updated examples" would change the prose for the worse, and without Bill having the chance to stop an editor.

See also Bill Kent's website for some of his photography and other papers.

This book projects a philosophy that life and reality are at bottom amorphous, disordered, contradictory, inconsistent, non-rational, and non-objective. Science and much of western philosophy have in the past presented us with the illusion that things are otherwise. Rational views of the universe are idealized models that only approximate reality. The approximations are useful. The models are successful often enough in predicting the behavior of things that they provide a useful foundation for science and technology. But they are ultimately only approximations of reality, and non-unique at that.

This bothers many of us. We don’t want to confront the unreality of reality. It frightens, like the shifting ground in an earthquake. We are abruptly left without reference points, without foundations, with nothing to stand on but our imaginations, our ethereal self-awareness.

So we shrug it off, shake it away as nonsense, philosophy, fantasy. What good is it? Maybe if we shut our eyes the notion will go away.

—Bill Kent

★★★★★

You Have Updates

WordPress just told me I have a bunch of updates, so I pushed the button, at my convenience. My iPhone has a bunch of updates, but I certainly don't have autoupdate turned on; I'll look at those when I feel like it.

I don't understand how people put up with their software deciding to "update" on them without permission.

Mac is insistent with the "updates waiting" dialog, but you can tell it to fuck off indefinitely.

Linux is like a broken car you have to go dumpster-diving to find new parts for.

But Windows breaks into your home to change your shit around. Just no.

W3C DRM OMGzors

Oh sweet zombie Jesus, the DRM whining again? You can have fucking Flash (you goddamned savages), or you can have DRM and a nice native player. Ebooks and downloaded music are mostly watermarked and DRM-less (except on Kindle), but you can't do that on the fly with video encoding.

You aren't going to convince Sony/Netflix/etc to just give you non-DRM copies of a $100M budget movie or series. And once in a while I like a Guardians of the Galaxy, Inception, or Justified. If you don't, the presence or absence of DRM in the browser makes zero difference to your life. You're just bitching about something that doesn't affect you.

For a slightly more calm, less profane explanation, read Tim Berners-Lee's post.

The Land Before iPhone

In which millennials try to recall kindergarten pre-iPhone

iPhone was nice, but not a big change to my lifestyle; I already had a Treo, and before that a LifeDrive, and before that a Palm III, and had Internet since before I was boinking those kids' mothers. I was basically the model for the Mondo 2000 "R.U. A Cyberpunk" poster (the joke being R.U.Sirius was… nevermind), and yes, I read Mondo2k & Wired before they were dead and/or uncool.

Levelling Up

Pseudo-nerds who make the argument "exercise is like levelling up!" are not very good players. Not meta.

After sleep, food, life bullshit, email, meetings, etc., you get at best 8 XP (hours) per day, and more often just 4, to work on whatever skills you like.

If you spend 1-2 of those hours per day on PE, you're wasting 12.5-25% of your potential (25-50% on a 4-XP day) just to multiclass as Nerd/Jock, while others spend all of their XP levelling Nerd skills.
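For the min-maxers, the multiclassing math as a throwaway Python sketch: `share_of_xp` is a hypothetical helper, and "XP" just means free hours per day, as above. The 8-XP best case gives the 12.5-25% figure; the more common 4-XP day doubles it.

```python
def share_of_xp(pe_hours: float, xp_per_day: float) -> float:
    """Fraction of a day's free hours ("XP") spent on PE instead of Nerd skills."""
    return pe_hours / xp_per_day


# 8 XP is the best-case day; 4 XP is the more common one.
for xp_per_day in (8, 4):
    low = share_of_xp(1, xp_per_day)
    high = share_of_xp(2, xp_per_day)
    print(f"{xp_per_day} XP/day: {low:.1%} to {high:.1%} on PE")

# Prints:
# 8 XP/day: 12.5% to 25.0% on PE
# 4 XP/day: 25.0% to 50.0% on PE
```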

MP3 Considered Harmful

People bitching about "death of MP3" articles made me look:

NPR on MP3

Which is actually quite reasonable. I haven't deliberately touched MP3 in a decade, because AAC sounds better in 4/5 the space (typically 256k AAC is better than 320k MP3). I keep some original rips or purchased music in FLAC, but that's still unusably large on an iPod or iPhone.
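For the space half of that claim, the arithmetic is simple: constant-bitrate audio takes bitrate × duration ÷ 8 bytes. A quick Python sketch, assuming CBR encoding and ignoring container overhead, tags, and album art (`megabytes_per_minute` is a hypothetical helper):

```python
def megabytes_per_minute(kbps: float) -> float:
    """Audio payload for a constant-bitrate stream, in MB (10**6 bytes) per minute."""
    bits_per_minute = kbps * 1000 * 60
    return bits_per_minute / 8 / 1_000_000


aac = megabytes_per_minute(256)
mp3 = megabytes_per_minute(320)
print(f"256k AAC: {aac:.2f} MB/min")           # 256k AAC: 1.92 MB/min
print(f"320k MP3: {mp3:.2f} MB/min")           # 320k MP3: 2.40 MB/min
print(f"AAC/MP3 size ratio: {aac / mp3:.2f}")  # AAC/MP3 size ratio: 0.80
```

At those two bitrates the AAC file is 256/320 = 80% the size of the MP3. Variable-bitrate encodes will drift from these numbers, since their size depends on the material.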

MP3 was designed to encode "Tom's Diner" and sounds worse the further you get from that.

The MP3 patents expired in April 2017 (maybe: with continuations and overlapping patents it's hard to be sure), which means ideologues who don't want to pay for software other people invented can now use MP3 instead of the noxious OGG format that sounds like a backfiring car; they still won't use AAC or anything else from this century. But for everyone else, it means we keep using better formats whenever possible.