So, up front: this is a trashy show in a lot of ways, one that's trying to be much, much higher and mostly failing. It exists so someone who wasted their college tuition reading philosophers can pick up a paycheck name-dropping Kant in each script; kudos to that guy for finding a way to make philosophy pay. (Disclosure: I also read philosophers in college and since, mostly on my own time, and never tried to extract money for it.) But it isn't written as a philosophy treatise, though it occasionally tries; mostly it's just a dumb sitcom.

The main cast are a trashy girl-next-door mean chick, a hot chick with an English accent I can't stand, a philosophy nerd (irony, or shitty writing: he's a black guy teaching entirely from texts written by old white guys, several of whom defended slavery or racism outright; not a single non-honky philosophy is ever discussed), and a moron. They're trying to survive an afterlife where they don't quite seem to belong, run by well-past-his-sell-by-date Ted Danson and a slightly frumpy robot girl (who says she's neither), in standard sitcom cycles (literally: there are mental reboots so episodes can restart from the beginning), though it does change up the formula eventually. I do like the hot chick and the mean chick; they have character. Maybe the robot girl too, even limited by her role. Sadly, the nerd is one-note, the moron can barely breathe in and out without electric shocks, and the ancient stick figure of Ted Danson is stiff, and overacts whenever he does break out of being stiff.

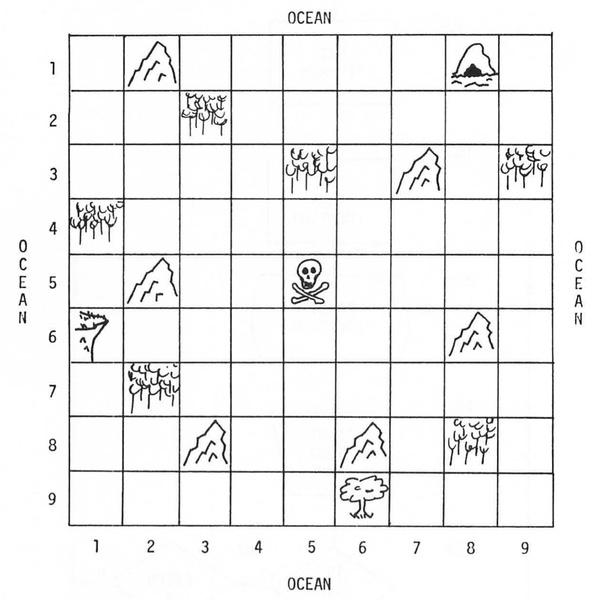

The key premise of the show is that you earn "points" by your actions in life, which sorts you into "The Good Place" or "The Bad Place". There's, uh, roughly everything wrong with this.

First, obviously, there's no magical afterlife. It makes no sense: there's no evolutionary advantage to an afterlife, and it is infinitely more likely that Humans, the only animals who can rationalize and make up stories, invented one to deal with our fear of death than that a magic sky fairy suddenly gifted Homo sapiens with an invisible remote backup system. When you die, your brain patterns rot, and within about 5-10 minutes the program that was you is no longer recoverable. There's probably nothing like an Omega Point or Roko's Basilisk for the same reason: that information won't survive from the current hot period of the Universe to the long, cold, efficient computational period, so no AI can reconstruct you. I'm as sad and angry about this as anyone, but I don't delude myself.

Second, even if we say "YER A WIZARD, HARRY" and grant you a magical afterlife, it's somehow populated by immortal beings (IBs). Where do they come from? How does that evolve? How do they get magical powers? If Humans can reach a half-measure of sanity and wisdom by 40, 60, or 80 years, every IB should be perfectly enlightened and know every trick and skill possible by 1,000, 100,000, or 13.5 billion years old. Yet the IBs shown are as stupid and easily tricked as Humans, once you get to The Actual Plot of this show. To pick the exact opposite of this show, Hellraiser had an internally consistent magical afterlife: "Hell" is an alien universe inhabited by Cenobites with a wide range of powers, whose experiences are so powerful that they would seem like torture to a Human; they collect Humans who seek out that experience through magical devices like the puzzle box, not to reward or punish meaningless behaviors on Earth. Good and evil mean nothing in Hellraiser.

Every IB in this show is insultingly stupid: frat-boy demons running repetitive physical tortures inferior to Torquemada's work here on Earth; farting evil robot girls; a neutral Judge too silly for a daytime TV show, who only wants to eat her burrito. Quite often, it's low, low, lowest-fucking-brow comedy.

Third, and most damning (heh): any system of morality with a scoring system ends up being solely about that scoring system. If "God and/or Santa are Watching," as Christians claim, you must be good according to the dictates of the Bible to score high enough to enter Heaven; it doesn't matter what's logically right and wrong, only the specific rules of an eternal, sex-obsessed Middle-Eastern tyrant. Everyone who ate shellfish, wore mixed fibers, or got a tattoo (all forbidden by Leviticus), or who failed to commit genocide & slavery when ordered by a prophet of God (as happens all through the Old Testament), or who masturbated to anyone but their lawfully wedded spouse (forbidden by Jesus in Matthew 5:28), is gonna have a real bad eternity in Hell.

The scoring system for The Good/Bad Place makes it impossible to commit a "selfless" act unless you're a total moron (so, possibly the moron character, but he's as often unthinkingly rotten as nice). The show treats this as a feature, as if you can only do good deeds when you can't see the score.

In philosophy without gods, you can choose to do good (try defining "good" in fewer than 10,000 pages…) instead of evil (same) because your personal or societal reward system is rigged that way (laws, in general), or because you selfishly want to look altruistic (maybe virtue-signalling to attract a mate), or because universalizing your behavior means you should selfishly do right to raise the level for everyone including yourself ("think global, act local"), or purely at random, and either way you have still done good deeds. While the ancient Stoics (especially my favorite, Marcus Aurelius) showed piety toward the deified Emperors and the gods of the Pantheon, they didn't ask the gods for rules; they found a way to live based on reason, a modicum of compassion, and facing the harsh world as it exists.

But once the authorities install an objective scoring system with infinite reward and punishment, you must act to maximize your score. No moral debate is possible; you'd just find the highest reward you can achieve each day and grind on it. Those born with the most wealth and privilege will be far better able to raise their scores, since they don't have to spend their time attending to life's necessities, so the rich get rewarded and the poor get punished.

This show seems to think Jiminy Cricket sits in your head as a quiet voice that needs no training, and you just have to listen to it to know good from evil. There's a discussion of Les Misérables and stealing bread (worth exactly -17 points) that's used only for mockery, but in real life that kind of ambiguity is impossibly hard to make rules for.

I liked Eleanor and Tahani, and sometimes Michael, playing off each other enough to keep watching through S2, but every time Chidi speaks I roll my eyes and wish that just once he'd reference someone not on the Dead Honkys shelf, and especially not the Prussian Immanuel Kant, who wrote some of the earliest texts of "scientific racism", including such gems as "The Negroes of Africa have by nature no feeling that rises above the trifling" (1764, Observations on the Feeling of the Beautiful and the Sublime). Fuck that guy.

★½☆☆☆